NVIDIA Ampere Architecture

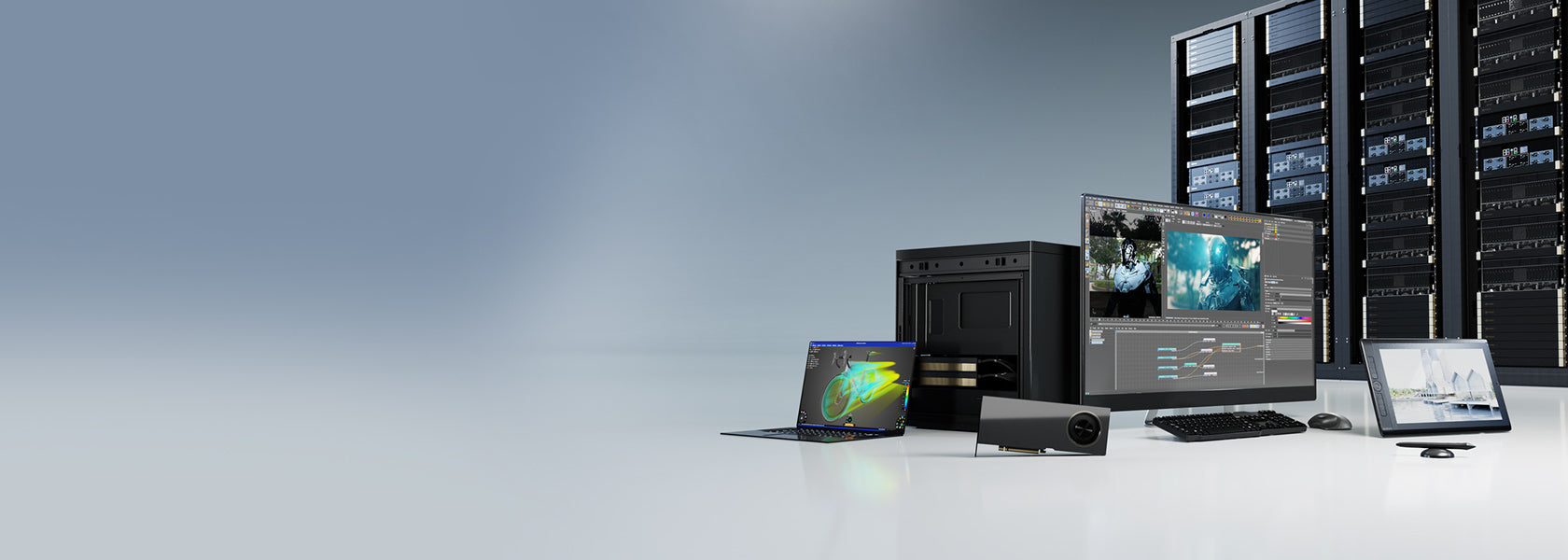

The NVIDIA Ampere architecture is designed to meet the demands of elastic computing in modern data centres. It introduces six key innovations: third-generation Tensor Cores, Multi-Instance GPU (MIG), third-generation NVLink, structural sparsity, second-generation RT Cores, and smarter, faster memory.

Tensor Core technology, first introduced in the NVIDIA Volta™ architecture, brought dramatic speedups to AI, cutting training times from weeks to hours. The NVIDIA Ampere architecture’s third-generation Tensor Cores build on that foundation with two new precisions: Tensor Float 32 (TF32), which accelerates and simplifies AI adoption, and floating point 64 (FP64), which extends the power of Tensor Cores to HPC. TF32 delivers speedups of up to 20X for AI, and with added support for bfloat16, INT8, and INT4, Tensor Cores become a highly versatile accelerator for both AI training and inference.
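TF32 keeps FP32’s 8-bit exponent (so the same numeric range) but only 10 explicit mantissa bits, the same precision as FP16. The sketch below emulates that in software by rounding an FP32 value’s 23-bit mantissa down to 10 bits. The helper names are hypothetical, and round-to-nearest-even is an assumption about the rounding mode; it is meant to show the format, not to reproduce the hardware exactly.

```python
import struct


def _fp32_to_bits(x: float) -> int:
    """Round x to IEEE-754 single precision and return its 32-bit pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def _bits_to_fp32(bits: int) -> float:
    """Reinterpret a 32-bit pattern as an IEEE-754 single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]


def to_tf32(x: float) -> float:
    """Round x to TF32: FP32's 8-bit exponent, but only 10 explicit mantissa bits.

    Assumes round-to-nearest-even on the 13 dropped mantissa bits.
    NaN and infinity are not handled specially in this sketch.
    """
    dropped = 23 - 10                      # FP32 has 23 mantissa bits, TF32 keeps 10
    bits = _fp32_to_bits(x)
    keep_lsb = (bits >> dropped) & 1       # lowest kept bit, used to break ties to even
    bits += (1 << (dropped - 1)) - 1 + keep_lsb
    bits &= ~((1 << dropped) - 1)          # clear the dropped mantissa bits
    return _bits_to_fp32(bits)


print(to_tf32(1 / 3), to_tf32(1e38))
```

Values near 1.0 keep about three decimal digits, while a value such as 1e38, far outside FP16’s range, still survives because the exponent field is unchanged.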

Multi-Instance GPU (MIG) lets multiple workloads share a single GPU. Each A100 can be partitioned into as many as seven GPU instances, each fully isolated and secured at the hardware level with its own high-bandwidth memory, cache, and compute cores. This brings acceleration to every application, big and small, with guaranteed quality of service. IT administrators can offer right-sized GPU acceleration for optimal utilisation and extend access to every user and application across both bare-metal and virtualised environments.
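To make the partitioning idea concrete, here is a toy capacity model of MIG on an A100 40GB. The slice counts (7 compute, 8 memory) and the profile table are assumptions drawn from NVIDIA’s published MIG profiles, and the `allocate` helper is hypothetical. It only shows that each instance receives its own non-overlapping compute and memory slices; the real driver also enforces placement rules, so a mix accepted here may still be rejected on actual hardware.

```python
# Simplified capacity model of MIG on an A100 40GB. Assumed (not from the text
# above): 7 compute slices, 8 memory slices of ~5 GB each, and the profile sizes
# below. Real MIG placement rules are ignored in this sketch.
PROFILES = {             # name: (compute slices, memory slices)
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
COMPUTE_SLICES, MEMORY_SLICES = 7, 8


def allocate(requested):
    """Give each requested instance its own non-overlapping compute and memory slices."""
    layout, next_c, next_m = [], 0, 0
    for name in requested:
        c, m = PROFILES[name]
        if next_c + c > COMPUTE_SLICES or next_m + m > MEMORY_SLICES:
            raise ValueError(f"not enough free slices for {name}")
        layout.append((name, range(next_c, next_c + c), range(next_m, next_m + m)))
        next_c, next_m = next_c + c, next_m + m
    return layout


for name, compute, memory in allocate(["3g.20gb", "2g.10gb", "1g.5gb", "1g.5gb"]):
    print(name, "compute", list(compute), "memory", list(memory))
```

Because no two instances share a slice, one tenant’s workload cannot consume another’s compute or memory, which is the property that underpins MIG’s quality-of-service guarantee.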

Third-generation NVLink doubles direct GPU-to-GPU bandwidth to 600 gigabytes per second (GB/s), almost 10X that of PCIe Gen4. Paired with the latest generation of NVIDIA NVSwitch™, it lets every GPU in a server communicate with every other GPU at full NVLink speed. NVIDIA DGX™ A100 and servers from other leading computer makers use NVLink and NVSwitch technology through NVIDIA HGX™ A100 baseboards to deliver greater scalability for HPC and AI workloads.
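The 600 GB/s and “almost 10X” figures can be checked with simple arithmetic. The sketch assumes 12 NVLink links per A100 at 25 GB/s per direction each, and roughly 32 GB/s per direction for a PCIe Gen4 x16 slot; these inputs are not stated in the text above. Both headline numbers count both directions, so a one-way copy sees half the aggregate. The transfer times are idealised lower bounds that ignore latency and protocol overhead, and assume all 12 links are usable between the pair of GPUs, as with NVSwitch.

```python
# Assumed figures (not stated in the text above).
NVLINK_LINKS = 12
NVLINK_GBPS_PER_LINK_PER_DIR = 25.0
PCIE4_X16_GBPS_PER_DIR = 32.0


def aggregate_bidirectional(per_dir_gbps: float, links: int = 1) -> float:
    """Total bandwidth counting both directions across all links, in GB/s."""
    return 2 * per_dir_gbps * links


def ideal_transfer_seconds(gigabytes: float, per_dir_gbps: float) -> float:
    """Lower bound on a one-way copy, ignoring latency and protocol overhead."""
    return gigabytes / per_dir_gbps


nvlink_total = aggregate_bidirectional(NVLINK_GBPS_PER_LINK_PER_DIR, NVLINK_LINKS)
pcie_total = aggregate_bidirectional(PCIE4_X16_GBPS_PER_DIR)
ratio = nvlink_total / pcie_total                               # 600 / 64

nvlink_one_way = NVLINK_GBPS_PER_LINK_PER_DIR * NVLINK_LINKS    # per-direction aggregate
print(f"NVLink aggregate: {nvlink_total:.0f} GB/s, {ratio:.1f}x PCIe Gen4 x16")
print(f"10 GB one-way: NVLink {ideal_transfer_seconds(10, nvlink_one_way) * 1e3:.1f} ms, "
      f"PCIe {ideal_transfer_seconds(10, PCIE4_X16_GBPS_PER_DIR) * 1e3:.1f} ms")
```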

Modern AI networks keep growing, with millions and in some cases billions of parameters. Not all of these parameters are needed for accurate predictions, and some can be set to zero to make the model “sparse” without compromising accuracy. The Tensor Cores in the NVIDIA Ampere architecture can deliver up to 2X higher performance on sparse models. The feature most readily benefits AI inference, but it can also improve training performance.
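The sparsity that Ampere Tensor Cores accelerate is fine-grained and structured: in every group of four consecutive weights, at most two are non-zero (often called 2:4 sparsity). A minimal sketch of producing that pattern by magnitude pruning is shown below; the function names are hypothetical, and in practice the pruned network is usually fine-tuned afterwards to recover accuracy.

```python
def prune_2_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in every group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = list(weights)
    for start in range(0, len(pruned), 4):
        group = range(start, start + 4)
        # Indices of the two entries with the smallest |w| in this group.
        smallest = sorted(group, key=lambda i: abs(pruned[i]))[:2]
        for i in smallest:
            pruned[i] = 0.0
    return pruned


def is_2_4_sparse(weights: list[float]) -> bool:
    """Check that every group of four has at most two non-zero values."""
    return all(
        sum(1 for w in weights[i:i + 4] if w != 0) <= 2
        for i in range(0, len(weights), 4)
    )


w = [0.9, -0.1, 0.05, -0.7, 0.3, 0.2, -0.8, 0.0]
print(prune_2_4(w))
```

Keeping the largest-magnitude half of each group is a common heuristic because those weights tend to contribute most to the output; the fixed 2-of-4 structure is what lets the hardware skip the zeros efficiently.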

The second-generation RT Cores in the NVIDIA A40 deliver massive speedups for workloads such as photorealistic rendering of movie content, architectural design evaluations, and virtual prototyping of product designs. RT Cores also speed up the rendering of ray-traced motion blur for faster results with greater visual accuracy and can simultaneously run ray tracing with either shading or denoising capabilities.

A100, the NVIDIA Ampere architecture’s flagship product, brings massive amounts of compute to data centres. It offers class-leading memory bandwidth of up to 2 terabytes per second (TB/s) on the 80 GB model, more than double the previous generation, and significantly more on-chip memory, including a 40-megabyte (MB) level 2 cache, 7X larger than the previous generation, to maximise compute performance.
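One way to see why memory bandwidth matters for compute performance is the roofline model: a kernel’s attainable throughput is the lower of the chip’s peak compute and its arithmetic intensity (FLOPs per byte moved from memory) multiplied by memory bandwidth. The sketch assumes A100’s published dense FP16 Tensor Core peak of 312 TFLOPS and the 2 TB/s bandwidth figure (which applies to the 80 GB A100); both are inputs you can change. A larger L2 cache helps by serving reused data on-chip, which raises a kernel’s effective intensity with respect to DRAM.

```python
# Assumed inputs: A100 dense FP16 Tensor Core peak and 2 TB/s memory bandwidth.
PEAK_TFLOPS = 312.0
BANDWIDTH_TB_PER_S = 2.0


def attainable_tflops(flops_per_byte: float) -> float:
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(PEAK_TFLOPS, flops_per_byte * BANDWIDTH_TB_PER_S)


# Arithmetic intensity (FLOP per byte) at which a kernel stops being memory-bound.
ridge_point = PEAK_TFLOPS / BANDWIDTH_TB_PER_S

for intensity in (10, 100, 156, 500):
    print(f"{intensity:>4} FLOP/byte -> {attainable_tflops(intensity):.0f} TFLOPS")
```

Kernels below the ridge point are bandwidth-bound, so more bandwidth or better cache reuse raises their throughput directly; kernels above it are compute-bound.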
